Problem Statement

This blog continues my previous post, in which we explored possible BPF-related issues after migrating from Dynamics 2016 to Dynamics 365.

In this blog we will explore resolution strategies for all the BPF migration issues that we encountered in the last blog.

To refresh the context, these were the issues we encountered when Dynamics was upgraded from 2016 to 365.

The BPF design change in Dynamics 365 causes the following issues:

- Each user may see a different active BPF on the same record, which causes user perception and usability issues.

- Out of box workflows that trigger on change of the process id and stage id fields behave unpredictably.

- Out of box workflows that compare stage id and process id field values give different results for different users.

Resolution

Setting Same BPF for all Users in Dynamics 365

In Dynamics 365, by default the “SetProcess” action or “SetProcessRequest” only sets the active BPF for the user in whose context the request is executed, not for all the users present in Dynamics. There are two possible ways of tackling the situation:

1. Using a workflow code activity to execute SetProcessRequest for all the users in Dynamics

The idea behind this is to execute the “SetProcessRequest” against the target record for all the users in Dynamics. Mentioned below is a code snippet for the same.

Mentioned below is an analysis of the pros and cons of this approach.

PROS

- We can configure the workflow code activity to run when the record is created. It runs as a synchronous operation, so the change is reflected for all the users immediately.

CONS

- There is a 2-minute timeout constraint on the workflow assembly. Therefore, depending on the number of users for which we want to set the same BPF, the request may time out.

- In the above example, I am looping through all the users in Dynamics. However, in real-world scenarios not all the users may have the required license or access to the BPF record, and for those users the request will throw an exception. To avoid that, we need to pass only the users for whom setting the same BPF is essential.

- We need to be careful when using the “SetProcessRequest”: if there is already an active instance of the BPF against the target entity, it will not preserve the existing stage value but will instead overwrite the previous instance of that BPF.

2. Using the client-side API to check the active BPF for the logged-in user and change it

In this approach, the idea is to check which BPF is currently active for the logged-in user and to change it in the form on load event, depending on the business requirement. Mentioned below is the JavaScript code snippet for the same.
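A minimal sketch of such a check might look like the following. It assumes the handler receives the execution context (on releases that predate `getFormContext`, `Xrm.Page` could be passed in as `formContext` instead). The names `DESIRED_PROCESS_ID`, `normalizeGuid`, `ensureActiveProcess` and `onFormLoad` are hypothetical, and the GUID is a placeholder for the id of the BPF that should be active.

```javascript
// Placeholder GUID of the BPF every user should see (assumption: replace with a real process id).
const DESIRED_PROCESS_ID = "00000000-0000-0000-0000-000000000000";

// Normalise GUIDs so that "{ABC...}" and "abc..." compare as equal.
function normalizeGuid(id) {
  return String(id || "").replace(/[{}]/g, "").toLowerCase();
}

// Switches the form to the desired BPF only when a different one (or none) is active.
// Returns true when a switch was requested, false when nothing had to change.
function ensureActiveProcess(formContext, desiredProcessId, onDone) {
  const processApi = formContext.data.process;
  const active = processApi.getActiveProcess();

  if (active && normalizeGuid(active.getId()) === normalizeGuid(desiredProcessId)) {
    return false; // the desired BPF is already active for this user
  }

  // setActiveProcess is asynchronous; the callback receives "success" or "invalid".
  processApi.setActiveProcess(desiredProcessId, function (result) {
    if (onDone) {
      onDone(result);
    }
  });
  return true;
}

// Form OnLoad entry point.
function onFormLoad(executionContext) {
  ensureActiveProcess(executionContext.getFormContext(), DESIRED_PROCESS_ID);
}
```

To try it, `onFormLoad` would be registered on the form on load event with the option to pass the execution context as the first parameter enabled. The guard on the current process id keeps the form from switching (and reloading) again once the desired BPF is already active.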

PROS

- The JavaScript code snippet can just be embedded in the form on load event and will be easy to maintain and change.

CONS

- After the correct BPF is set for the user, there will be a one-time reload of the form. This may degrade the user experience compared to the first approach.

- This approach changes the BPF for a user only once they open the form. Therefore, at the backend, the system will continue to store different values of stage id and process id for each user.

- Because of this, it is imperative to implement the steps described below for retrieving the active stage and process, instead of doing a direct comparison with the stage id and process id fields.

Configuring out of box workflows triggering on change of stage and process id fields

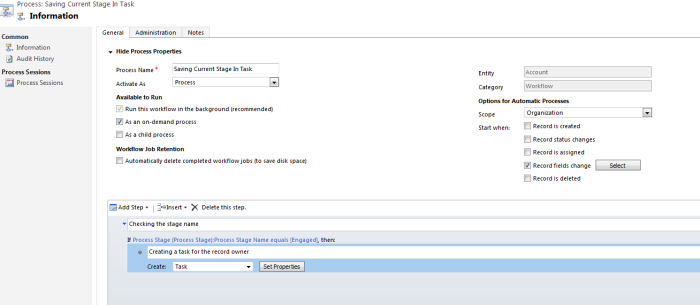

Up to Dynamics 2016, running workflows on update of the stage id and process id values was supported. For example, mentioned below is an out of box workflow which triggers on update of the stage and process values on a record.

However, in Dynamics 365, with multiple active BPFs possible on a record, this needs to change. Mentioned below is the reason for the same.

- Suppose in CRM 2016 there were two BPFs, A and B, on the record. At any time, only one could be active. Therefore, the above-mentioned workflow would have triggered only for the active process.

- However, now there are multiple active BPFs on the record. Therefore, the above workflow will trigger for each of the two BPFs, which can make it run more often than intended.

To resolve this situation, we need to make the changes mentioned below in the workflow and in the corresponding BPF from which we want to trigger the workflow.

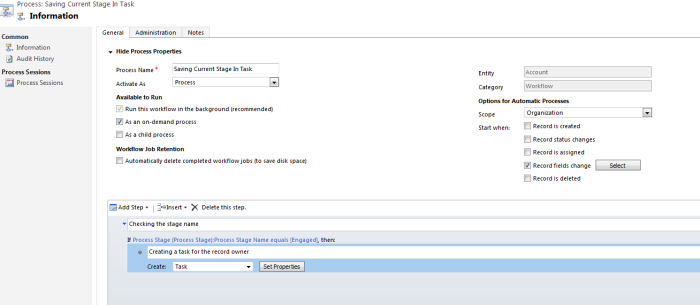

1. Make the process On Demand and remove any on change events in it.

The first step is to make the workflow on demand and remove any change events configured in the workflow.

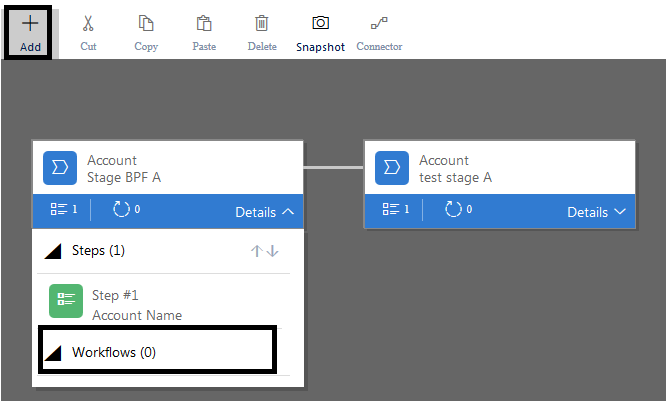

2. Calling the workflow from stage event in the BPF.

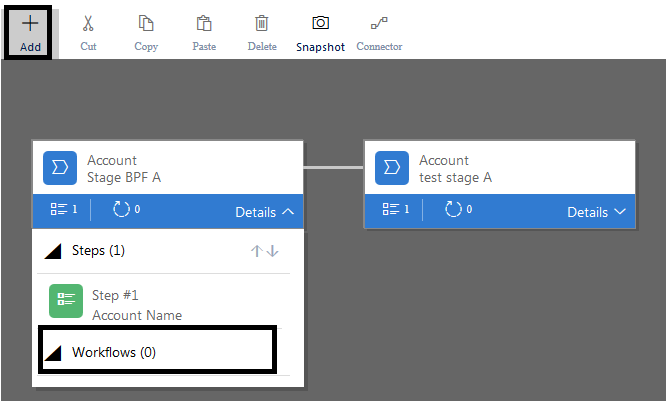

This is a newly introduced feature in BPFs. In a BPF we can now add a step that runs an on demand workflow when a stage is entered or exited. Mentioned below are the steps for the same.

a) Select a stage, click on the “+ Add” button and select “Add Workflow”. Verify that a workflow step is added on the stage.

b) Now, for the workflow step, select the appropriate trigger and workflow. The trigger can take one of two values, “Stage Entry” or “Stage Exit”. In the workflow lookup, we can select any active on demand workflow defined on the same entity as the BPF target entity, i.e. “account” in our example.

c) After specifying all the details, click on “Apply” and then “Validate” and “Update” the BPF.

Configuring out of box workflows doing active stage and process name comparison on the target entity

As described earlier, with the advent of Dynamics 365 there can be multiple active BPFs on the same target record. Moreover, as discovered in the previous blog, each user may have a different stageid and processid value depending on the active process set for that user.

Therefore, in our out of box workflows, we cannot do a direct comparison on the stage and process name values. The screenshots mentioned below describe the changes that we need to make in these kinds of workflows.

a) Mentioned below is a screenshot showing a basic example of a workflow where we were comparing the values of stage and process name before executing a step.

Any such workflow may give inconsistent results. The steps mentioned below show how we can correct this issue.

b) While suggesting the solution, I am assuming that we are interested in finding out the current stage of the active instance of a particular BPF process.

In this case, we need to write a custom workflow code activity that makes the C# SDK requests mentioned below.

    // Requires: using System; using Microsoft.Xrm.Sdk; using Microsoft.Crm.Sdk.Messages;

    /// <summary>
    /// Retrieves the active stage name of a given BPF as per the design changes introduced in Dynamics 365
    /// </summary>
    /// <param name="service">Organization service used to execute the requests</param>
    /// <param name="currentTargetRecordID">Id of the target record</param>
    /// <param name="currentTargetLogicalName">Logical name of the target entity</param>
    /// <returns>Name of the active stage, or an empty string if no matching BPF instance is found</returns>
    protected string RetrieveActiveStageName(IOrganizationService service, Guid currentTargetRecordID, string currentTargetLogicalName)
    {
        string activeStageName = "";

        // Retrieves all active BPF instances for the record
        RetrieveProcessInstancesRequest activeProcessReq = new RetrieveProcessInstancesRequest
        {
            EntityId = currentTargetRecordID,
            EntityLogicalName = currentTargetLogicalName
        };
        RetrieveProcessInstancesResponse activeProcessResp = (RetrieveProcessInstancesResponse)service.Execute(activeProcessReq);

        if (activeProcessResp.Processes == null || activeProcessResp.Processes.Entities == null)
        {
            return activeStageName;
        }

        foreach (Entity processInstance in activeProcessResp.Processes.Entities)
        {
            string activeProcessName = processInstance.Attributes["name"].ToString();

            // Display name of the BPF for which we need the active stage
            if (!activeProcessName.Contains("BPF A"))
            {
                continue;
            }

            Guid activeStageId = new Guid(processInstance.Attributes["processstageid"].ToString());

            // Retrieve all the stages in the active path of this BPF instance
            RetrieveActivePathRequest pathReq = new RetrieveActivePathRequest
            {
                ProcessInstanceId = processInstance.Id
            };
            RetrieveActivePathResponse pathResp = (RetrieveActivePathResponse)service.Execute(pathReq);

            // Loop through the stages and pick the one whose id matches the active stage id
            foreach (Entity stage in pathResp.ProcessStages.Entities)
            {
                if (stage.Attributes["processstageid"].ToString() == activeStageId.ToString())
                {
                    activeStageName = stage.Attributes["stagename"].ToString();
                }
            }

            // Only the first instance matching the BPF name is of interest
            break;
        }

        return activeStageName;
    }

We then add the above-mentioned code as a workflow code assembly and register it as a step in the out of box workflow.

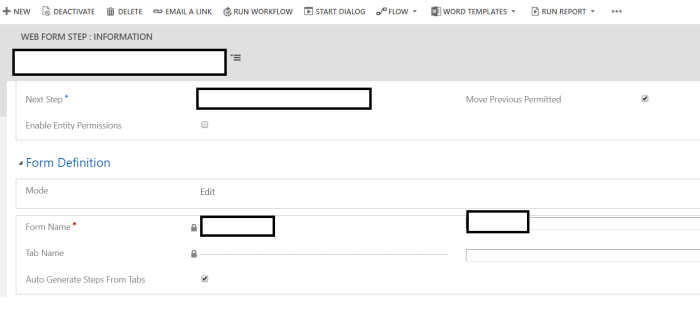
